1. Intro

Docker site: https://www.docker.com/

From Wikipedia:

Docker is an open-source project that automates the deployment of Linux applications inside software containers. Quote of features from Docker web pages:

Docker provides an additional layer of abstraction and automation of operating-system-level virtualization on Linux.[6] Docker uses the resource isolation features of the Linux kernel such as cgroups and kernel namespaces, and a union-capable file system such as OverlayFS and others[7] to allow independent "containers" to run within a single Linux instance, avoiding the overhead of starting and maintaining virtual machines.[8]

In short, Docker is a way of creating containers, deploying applications into them and running those applications inside containers. What is a container in the Docker world? It is something like a virtual PC, but very lightweight and easy to use: instead of booting its own operating system, it shares the Linux kernel of the host.

2. Docker installation

First of all, Docker has to be installed. The official site has instructions for different operating systems: https://docs.docker.com/engine/installation/

After installation you can run:

```
# docker run hello-world
```

The result should be something like:

```
Hello from Docker!
This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:
 1. The Docker client contacted the Docker daemon.
 2. The Docker daemon pulled the "hello-world" image from the Docker Hub.
 3. The Docker daemon created a new container from that image which runs the
    executable that produces the output you are currently reading.
 4. The Docker daemon streamed that output to the Docker client, which sent it
    to your terminal.

To try something more ambitious, you can run an Ubuntu container with:
 $ docker run -it ubuntu bash
```
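If you want to repeat this installation check from a Java program, the sketch below runs the same `docker run hello-world` command with `ProcessBuilder` and looks for the greeting line. It is only an illustration: the class name `HelloWorldCheck` is hypothetical, it assumes the `docker` binary is on the `PATH` and Java 9 or newer, and matching on "Hello from Docker!" is a heuristic based on the output shown above.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.List;

class HelloWorldCheck {
    // Greeting printed by the hello-world image (see the output above)
    static final String GREETING = "Hello from Docker!";

    /** Returns true if the captured output contains the hello-world greeting. */
    static boolean looksLikeHelloWorld(String output) {
        return output != null && output.contains(GREETING);
    }

    /** Runs "docker run hello-world" and returns stdout and stderr merged together. */
    static String runHelloWorld() throws IOException, InterruptedException {
        Process process = new ProcessBuilder(List.of("docker", "run", "hello-world"))
                .redirectErrorStream(true) // merge stderr into stdout
                .start();
        // Read everything until the process closes its output, then wait for it to exit
        byte[] bytes = process.getInputStream().readAllBytes();
        process.waitFor();
        return new String(bytes, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws Exception {
        String output = runHelloWorld();
        System.out.println(looksLikeHelloWorld(output)
                ? "Docker installation looks OK"
                : "Unexpected output:\n" + output);
    }
}
```

On the very first run the output also contains Docker's message about pulling the image, which is why stderr is merged into the captured text.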

2.1 Main concepts/components

In the previous step, Docker's output already told us a lot about how it works:

- Docker images are stored in a registry, Docker Hub;

- if the needed image is not available locally, it is pulled from Docker Hub;

- based on this image, Docker creates a container, which it can then run.
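The three points above can be illustrated with a toy model in Java. This is not Docker's API: the class `ImagePullModel` and its "registry" map are hypothetical stand-ins for Docker Hub and the local image store, meant only to show the "use the local image if present, otherwise pull it, then create a container" flow. It assumes Java 9 or newer (for `Map.of`).

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy model only: NOT Docker's API, just the lookup/pull/create flow described above.
class ImagePullModel {
    private final Map<String, String> registry;                      // stand-in for Docker Hub: name -> image
    private final Map<String, String> localImages = new HashMap<>(); // images already on this machine
    private final List<String> events = new ArrayList<>();           // what the "daemon" did, in order
    private int containerCount = 0;

    ImagePullModel(Map<String, String> registry) {
        this.registry = new HashMap<>(registry);
    }

    /** Returns the image, pulling it from the registry only if it is not available locally. */
    String ensureImage(String name) {
        String image = localImages.get(name);
        if (image == null) {
            image = registry.get(name);
            if (image == null) {
                throw new IllegalArgumentException("no such image in registry: " + name);
            }
            localImages.put(name, image);
            events.add("pulled " + name);
        }
        return image;
    }

    /** Creates a new (pretend) container from the named image and returns its id. */
    String createContainer(String imageName) {
        ensureImage(imageName);
        String id = "container-" + (++containerCount);
        events.add("created " + id + " from " + imageName);
        return id;
    }

    List<String> events() {
        return events;
    }

    public static void main(String[] args) {
        ImagePullModel daemon = new ImagePullModel(Map.of("hello-world", "greeting program"));
        daemon.createContainer("hello-world"); // first run: image is pulled, then a container is created
        daemon.createContainer("hello-world"); // second run: image is already local, no pull
        daemon.events().forEach(System.out::println);
    }
}
```

This mirrors what you see when running hello-world twice: the second run starts much faster because no download is needed, but every run still creates a new container.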

To check the list of downloaded images, we can execute:

```
# docker images
```

The result will contain the hello-world image we just downloaded:

```
REPOSITORY    TAG       IMAGE ID       CREATED        SIZE
hello-world   latest    c54a2cc56cbb   6 months ago   1.848 kB
```
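If you need this list inside a Java tool, a small parser can turn each row of the table into a record. This is a sketch under stated assumptions: `DockerImagesParser` is a hypothetical name, it requires Java 16+ (records), and it assumes the default five-column layout shown above, where columns are separated by two or more spaces while values like "6 months ago" contain only single spaces. For scripting, `docker images --format` gives a more stable output than parsing the human-readable table.

```java
import java.util.ArrayList;
import java.util.List;

class DockerImagesParser {
    /** One row of the "docker images" table. */
    record ImageRow(String repository, String tag, String imageId, String created, String size) {}

    /**
     * Parses the default table output of "docker images".
     * Assumption: columns are separated by two or more spaces, while values
     * such as "6 months ago" or "1.848 kB" contain only single spaces.
     */
    static List<ImageRow> parse(String output) {
        List<ImageRow> rows = new ArrayList<>();
        String[] lines = output.strip().split("\\R");
        for (int i = 1; i < lines.length; i++) { // line 0 is the header
            String line = lines[i].strip();
            if (line.isEmpty()) {
                continue;
            }
            String[] cols = line.split("\\s{2,}");
            if (cols.length != 5) {
                throw new IllegalArgumentException("unexpected line: " + line);
            }
            rows.add(new ImageRow(cols[0], cols[1], cols[2], cols[3], cols[4]));
        }
        return rows;
    }

    public static void main(String[] args) {
        String sample = String.join("\n",
                "REPOSITORY    TAG       IMAGE ID       CREATED        SIZE",
                "hello-world   latest    c54a2cc56cbb   6 months ago   1.848 kB");
        parse(sample).forEach(System.out::println);
    }
}
```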

3. Test application to run inside a Docker container

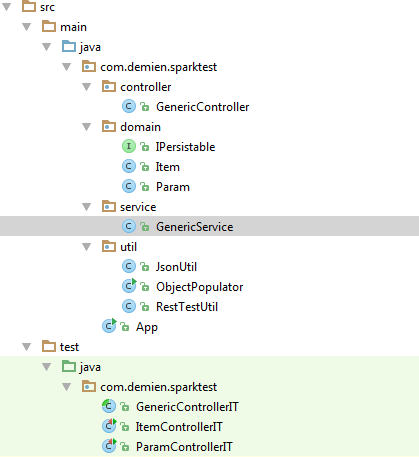

Now let's create a simple application (packaged as a JAR file) that we want to put inside a container.

I generated a simple Maven Spring Boot application at http://start.spring.io/: I selected "Web" in the dependencies and pressed the "generate project" button.

From the downloaded project we need just two files:

pom.xml

- I removed the test dependency and specified the JAR file name (MyTestApp):

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>com.demien</groupId>
    <artifactId>demo</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <packaging>jar</packaging>

    <name>demo</name>
    <description>Demo project for Spring Boot</description>

    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>1.4.2.RELEASE</version>
        <relativePath/> <!-- lookup parent from repository -->
    </parent>

    <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>
        <java.version>1.8</java.version>
    </properties>

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
    </dependencies>

    <build>
        <finalName>MyTestApp</finalName>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
</project>
```

And the main application class, DemoApplication.java:

```java
package com.demien;

import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController        // handler methods return the response body directly
@SpringBootApplication // enables auto-configuration and component scanning
public class DemoApplication {

    // Handles requests to /info
    @RequestMapping("info")
    public String info() {
        return "Hello world!";
    }

    // Starts the application with its embedded web server (port 8080 by default)
    public static void main(String[] args) {
        SpringApplication.run(DemoApplication.class, args);
    }
}
```

- I just added one endpoint, /info, which returns the "Hello world!" message.

We can now run our application from the command line:

```
mvn spring-boot:run
```

and open the URL http://localhost:8080/info

- the result should be "Hello world!".
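Instead of opening the browser, the endpoint can also be checked with a tiny Java client. This is a hedged sketch: the class name `InfoEndpointCheck` is hypothetical, it uses `java.net.http.HttpClient`, so it needs Java 11 or newer (it is a separate program, independent of the Java 1.8 setting in pom.xml), and it assumes the application listens on localhost:8080.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

class InfoEndpointCheck {
    /** Sends GET {baseUrl}/info and returns the response body; fails on a non-200 status. */
    static String fetchInfo(String baseUrl) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(5))
                .build();
        HttpRequest request = HttpRequest.newBuilder(URI.create(baseUrl + "/info"))
                .timeout(Duration.ofSeconds(5))
                .GET()
                .build();
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() != 200) {
            throw new IOException("unexpected status " + response.statusCode());
        }
        return response.body();
    }

    public static void main(String[] args) throws Exception {
        // Assumes the application is running locally on port 8080
        String body = fetchInfo("http://localhost:8080");
        System.out.println("Hello world!".equals(body) ? "OK: " + body : "Unexpected body: " + body);
    }
}
```

The same check works unchanged later, when the application runs inside a container with its port published on localhost:8080.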

4. Putting the test application into a container

4.1 Dockerfile

Now we have to create a container image. First of all, we need a file that describes it: the file must be named "Dockerfile", and in this example its content is:

```dockerfile
FROM maven
ADD target/MyTestApp.jar /usr/local/app/MyTestApp.jar
EXPOSE 8080
CMD ["java", "-jar", "/usr/local/app/MyTestApp.jar"]
```

Let's explain it step by step:

FROM maven

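- the base image for our container is maven (the official Maven image, which also contains a JDK, so the java command is available in it). Images on Docker Hub can be searched with the command "docker search XXX". For example: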

```
# docker search java
NAME                  DESCRIPTION                                     STARS   OFFICIAL   AUTOMATED
java                  Java is a concurrent, class-based, and obj...   1246    [OK]
anapsix/alpine-java   Oracle Java 8 (and 7) with GLIBC 2.23 over...   168                [OK]
develar/java                                                          53                 [OK]
isuper/java-oracle    This repository contains all java releases...   47                 [OK]
lwieske/java-8        Oracle Java 8 Container - Full + Slim - Ba...   30                 [OK]
nimmis/java-centos    This is docker images of CentOS 7 with dif...   20                 [OK]
```

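Next line of the Dockerfile:

ADD target/MyTestApp.jar /usr/local/app/MyTestApp.jar

- adds our application JAR file to the image from the previous step: the file is taken from the ./target directory (relative to the build context) and put into the /usr/local/app directory of the image filesystem.

EXPOSE 8080

- our application listens on port 8080. EXPOSE only documents this; the port is actually published to the host by the -p option of docker run, shown below.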

CMD ["java", "-jar", "/usr/local/app/MyTestApp.jar"]

- the command that will be executed when we ask Docker to run our container.
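To see how the four instructions fit together, here is a small Java sketch that renders the same Dockerfile from a few parameters, which can be handy if the JAR name or port changes. The class `DockerfileTemplate` and its parameters are hypothetical helpers for this illustration only; with the values from this article it produces exactly the Dockerfile shown above.

```java
class DockerfileTemplate {
    /** Renders a Dockerfile with the FROM, ADD, EXPOSE and CMD instructions explained above. */
    static String render(String baseImage, String jarName, String appDir, int port) {
        String target = appDir + "/" + jarName; // path of the JAR inside the image
        return String.join("\n",
                "FROM " + baseImage,                          // base image
                "ADD target/" + jarName + " " + target,       // copy the built JAR into the image
                "EXPOSE " + port,                             // document the port the app listens on
                // exec (JSON array) form: Docker starts java directly, without a shell
                "CMD [\"java\", \"-jar\", \"" + target + "\"]") + "\n";
    }

    public static void main(String[] args) {
        System.out.print(render("maven", "MyTestApp.jar", "/usr/local/app", 8080));
    }
}
```

Redirecting the program's output to a file named Dockerfile in the project directory would give the file used in the next step.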

4.2 Building a new container image

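Before building the image, the JAR file has to exist: mvn package builds it as target/MyTestApp.jar (mvn spring-boot:run alone does not package it). After that, we can create a new image based on the Dockerfile:

```
# mvn package
# docker build -t demien/docker-test1 .
```

Docker will read the Dockerfile in the current directory (the trailing "." is the build context) and create a new image named demien/docker-test1.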
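The build step can also be started from Java, for example from a small build helper. In this sketch the class `DockerBuild` is hypothetical; it only uses the `-t` option shown above, assumes the `docker` binary is on the `PATH`, and assumes the program is started in the project directory that contains the Dockerfile.

```java
import java.io.IOException;
import java.util.List;

class DockerBuild {
    /** Argument list for "docker build -t <tag> <contextDir>". */
    static List<String> buildCommand(String tag, String contextDir) {
        return List.of("docker", "build", "-t", tag, contextDir);
    }

    /** Runs the command, streaming its output to this console, and returns the exit code. */
    static int execute(List<String> command) throws IOException, InterruptedException {
        Process process = new ProcessBuilder(command)
                .inheritIO() // show docker's build output directly
                .start();
        return process.waitFor();
    }

    public static void main(String[] args) throws Exception {
        // Assumes the working directory is the project directory with the Dockerfile
        int exitCode = execute(buildCommand("demien/docker-test1", "."));
        System.out.println("docker build finished with exit code " + exitCode);
    }
}
```

Passing the arguments as a list (rather than one command string) avoids any shell quoting issues; a non-zero exit code means the build failed.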

During the build, Docker downloads the maven image together with all the layers it is built from. Now we can execute again:

```
# docker images
```

to check that the new image is present in the result list:

```
REPOSITORY            TAG       IMAGE ID       CREATED        SIZE
demien/docker-test1   latest    108d927b5ebb   5 days ago     667.4 MB
<none>                <none>    ffd0c9386045   5 days ago     667.4 MB
<none>                <none>    b41d536b6005   5 days ago     667.4 MB
<none>                <none>    9ce7e4000959   5 days ago     667.4 MB
<none>                <none>    3a3615d1befb   5 days ago     667.4 MB
<none>                <none>    f5d4c54045dc   5 days ago     667.4 MB
<none>                <none>    04dde1d25ea3   6 days ago     707.5 MB
maven                 latest    a90451161cc5   9 days ago     653.2 MB
hello-world           latest    c54a2cc56cbb   6 months ago   1.848 kB
```

The <none> entries are untagged ("dangling") images, most likely left over from earlier builds whose tag has moved to a newer build; they can be removed with docker image prune.

5. The end

We're almost done! Just one thing is left: we have to run the image we created by executing from the command line:

```
# docker run -p 8080:8080 demien/docker-test1
```

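The parameter "-p 8080:8080" publishes port 8080 of the container on port 8080 of our local machine (the format is hostPort:containerPort), so requests to local port 8080 are forwarded to port 8080 of the container.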
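The order of the two numbers matters: the first is the host port, the second the container port. The Java sketch below makes this explicit by parsing the mapping into a record. It is an illustration only: `PortMappingDemo` is a hypothetical name, it needs Java 16+ (records), and it handles just the simple hostPort:containerPort form used here, although docker run also accepts other forms (for example with an IP address or a protocol suffix).

```java
class PortMappingDemo {
    /** Requests to hostPort on the host are forwarded to containerPort inside the container. */
    record PortMapping(int hostPort, int containerPort) {
        PortMapping {
            if (!isValidPort(hostPort) || !isValidPort(containerPort)) {
                throw new IllegalArgumentException("port out of range: " + hostPort + ":" + containerPort);
            }
        }
    }

    static boolean isValidPort(int port) {
        return port >= 1 && port <= 65535;
    }

    /** Parses only the simple "hostPort:containerPort" form used in this article. */
    static PortMapping parse(String spec) {
        String[] parts = spec.split(":");
        if (parts.length != 2) {
            throw new IllegalArgumentException("expected hostPort:containerPort, got: " + spec);
        }
        // NumberFormatException (a subclass of IllegalArgumentException) signals non-numeric input
        return new PortMapping(Integer.parseInt(parts[0].trim()), Integer.parseInt(parts[1].trim()));
    }

    public static void main(String[] args) {
        System.out.println(parse("8080:8080")); // the mapping used above
        System.out.println(parse("9090:8080")); // host port 9090 -> container port 8080
    }
}
```

With a mapping like 9090:8080, the application inside the container would still listen on 8080, but we would open http://localhost:9090/info on the host.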

After the application starts, we can open http://localhost:8080/info, and the result should again be "Hello world!". This time, however, the response comes from the container: our request to the /info endpoint on local port 8080 was forwarded to port 8080 of the container, which runs MyTestApp.jar from its local directory /usr/local/app (as we defined with CMD ["java", "-jar", "/usr/local/app/MyTestApp.jar"]). All this magic is done by Docker, invisibly for us!

All source files can be downloaded from here.